Embedded vision systems are known for being fast and efficient. They can be built around an ARM-based system-on-chip (SoC) controller or a field-programmable gate array (FPGA). In general, systems built on an ARM-based SoC implement vision algorithms in software and struggle to scale to high-performance, low-latency applications. Hardware-based image processing systems (ASIC- or DSP-based modules) are very fast and work well once they are programmed. Their biggest drawback, however, is their relative inflexibility: if the application changes, reprogramming the system is difficult.

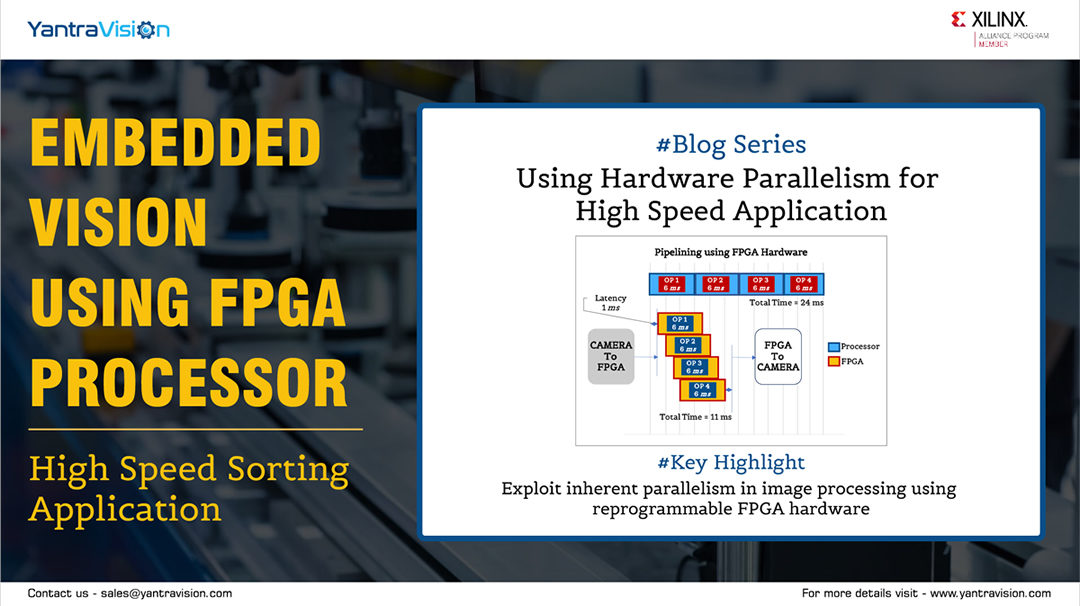

FPGAs combine the parallelism of hardware with the flexibility of software, so their functionality can be reprogrammed when needed. Early FPGAs had very little processing power, and an image processing system needed more than one processor. As FPGA processing power increased over the years, hybrid systems emerged in which FPGAs served as vision accelerators. Modern FPGAs have the capacity and resources to implement an entire application on a single device. This makes FPGAs a good choice for embedded real-time vision systems, especially in high-speed applications.

Real-time Image Processing

Real-time image processing systems capture images, analyse them, and use the result to control a specific activity within a fixed cycle time. There are two types of real-time systems: hard and soft. In a hard real-time system, failing to complete processing within the cycle time means the system has failed. A classic example is using vision to grade items on a conveyor belt: the system must complete both the image processing and the rejection of defective items within the available cycle time. In a soft real-time system, missing the deadline does not cause outright failure; a result is still produced, but it does not meet the required quality standard. Video transmission over the internet is an example of a soft real-time system.
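The difference between the two kinds of deadline can be expressed as a small decision rule. The sketch below is illustrative only: the names `Deadline`, `Outcome`, and `apply_deadline` are hypothetical, and the millisecond values in the usage are made-up numbers. A late result in hard mode is discarded and counted as a system failure (the item has already passed the ejector), while a late result in soft mode is still delivered, just flagged as late.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Deadline(Enum):
    HARD = "hard"  # e.g. grading items on a conveyor belt
    SOFT = "soft"  # e.g. streaming video over the internet


@dataclass
class Outcome:
    result: Optional[str]  # output actually used downstream (None if discarded)
    late: bool             # True if the cycle time was exceeded
    system_failure: bool   # True only when a hard deadline was missed


def apply_deadline(kind: Deadline, result: str,
                   elapsed_ms: float, cycle_ms: float) -> Outcome:
    """Decide what happens to a result given how long it took to produce."""
    if elapsed_ms <= cycle_ms:
        return Outcome(result, late=False, system_failure=False)
    if kind is Deadline.HARD:
        # Hypothetical conveyor case: the item has already passed the
        # ejector, so a late decision is useless and the system has failed.
        return Outcome(None, late=True, system_failure=True)
    # Soft case: the output is still delivered, only with degraded quality.
    return Outcome(result, late=True, system_failure=False)


if __name__ == "__main__":
    print(apply_deadline(Deadline.HARD, "reject", 3.5, 3.0))
    print(apply_deadline(Deadline.SOFT, "frame 42", 3.5, 3.0))
```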

A major challenge for real-time image processing systems arises when the time between successive events is shorter than the required response time. For example, on a conveyor belt that grades food items, the time between consecutive objects may be shorter than the time an object takes to travel from the inspection point to the actuator. There are two ways to handle this. The first is to constrain all processing to happen within the interval between successive items arriving at the inspection point, which imposes a much tighter real-time constraint. If that constraint cannot be met, the second is to use parallel processing to keep the original time constraint, spreading the work across multiple processors so that several items are in progress at once. Each item still has the full response time available, and because the combined throughput matches the event rate, the time constraint is met.
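The parallel approach comes down to a simple calculation. If items arrive every $T_a$ and each item needs processing time $L > T_a$, then $n = \lceil L / T_a \rceil$ processors working in round-robin each receive a new item only every $n \cdot T_a \ge L$, so none of them falls behind. The sketch below is a minimal model under that assumption; the function names and the example timings (an item every 1 ms, 2.5 ms of processing) are hypothetical. It assumes $L$ itself still fits within the inspection-to-actuator travel time, which the code does not check. When `workers_needed` returns 1, the first approach (finishing within the inter-arrival interval) already suffices.

```python
def workers_needed(processing_us: int, interarrival_us: int) -> int:
    """Smallest number of parallel processors whose combined throughput
    keeps up with items arriving every `interarrival_us` microseconds."""
    if processing_us <= 0 or interarrival_us <= 0:
        raise ValueError("times must be positive")
    # Integer ceiling division avoids floating-point rounding surprises.
    return (processing_us + interarrival_us - 1) // interarrival_us


def meets_deadline_round_robin(n_items: int, processing_us: int,
                               interarrival_us: int, n_workers: int) -> bool:
    """Dispatch item i to worker i % n_workers. Return True if every item
    can start the moment it arrives, so its result is ready exactly
    `processing_us` after arrival (the original time constraint holds)."""
    free_at = [0] * n_workers  # time at which each worker becomes idle
    for i in range(n_items):
        arrival = i * interarrival_us
        worker = i % n_workers
        if free_at[worker] > arrival:
            return False  # worker still busy: the item would have to queue
        free_at[worker] = arrival + processing_us
    return True


if __name__ == "__main__":
    # Hypothetical numbers: an item every 1 ms, 2.5 ms of processing per item.
    n = workers_needed(2500, 1000)
    print(n)                                                   # 3
    print(meets_deadline_round_robin(100, 2500, 1000, n))      # True
    print(meets_deadline_round_robin(100, 2500, 1000, n - 1))  # False
```

With two workers, worker 0 receives items at 0 ms and 2 ms but is busy until 2.5 ms, so the second item would have to wait; a third worker removes that conflict.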

Using FPGAs for Real-time Image Processing

FPGAs implement application logic by building separate hardware for each function. This gives them the speed of a dedicated hardware design while retaining the reprogrammable flexibility of software. FPGAs are therefore well suited to real-time image processing, especially when a design takes full advantage of the parallelism inherent in images.
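A rough way to see why separate hardware per function pays off is to count clock cycles. The sketch below assumes an idealized model: a single sequential processor spends one clock per function per pixel, while on the FPGA each function is its own fully pipelined block that accepts a new pixel every clock. Under that model, $S$ functions over $N$ pixels cost $S \cdot N$ cycles sequentially but only $S + N - 1$ cycles pipelined. The three point operations in `STAGES` are hypothetical stand-ins for the functions of a real vision design, and the code is a software simulation, not FPGA code.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Stage = Callable[[int], int]


def run_sequential(pixels: Sequence[int], stages: Sequence[Stage]) -> Tuple[List[int], int]:
    """One processor applies every stage to a pixel before starting the next
    pixel. Idealized cost model: one clock cycle per stage per pixel."""
    out: List[int] = []
    cycles = 0
    for p in pixels:
        for stage in stages:
            p = stage(p)
            cycles += 1
        out.append(p)
    return out, cycles


def run_pipelined(pixels: Sequence[int], stages: Sequence[Stage]) -> Tuple[List[int], int]:
    """Each stage is a separate hardware block with its own output register.
    On every clock, all stages fire at once, each working on a different pixel."""
    regs: List[Optional[int]] = [None] * len(stages)
    out: List[int] = []
    cycles = 0
    next_in = 0
    while len(out) < len(pixels):
        new_regs: List[Optional[int]] = [None] * len(stages)
        if next_in < len(pixels):          # stage 0 accepts one new pixel per clock
            new_regs[0] = stages[0](pixels[next_in])
            next_in += 1
        for k in range(1, len(stages)):    # stage k consumes stage k-1's previous output
            if regs[k - 1] is not None:
                new_regs[k] = stages[k](regs[k - 1])
        regs = new_regs
        cycles += 1
        if regs[-1] is not None:           # a finished pixel leaves the last stage
            out.append(regs[-1])
    return out, cycles


# Hypothetical point operations standing in for the functions of a vision design.
STAGES: List[Stage] = [
    lambda p: min(p + 20, 255),          # brighten with saturation
    lambda p: 255 - p,                   # invert
    lambda p: 255 if p > 128 else 0,     # binary threshold
]
PIXELS: List[int] = list(range(0, 256, 16))  # 16 sample pixel values

if __name__ == "__main__":
    seq_out, seq_cycles = run_sequential(PIXELS, STAGES)
    pipe_out, pipe_cycles = run_pipelined(PIXELS, STAGES)
    print(seq_out == pipe_out, seq_cycles, pipe_cycles)  # True 48 18
```

Both versions produce identical pixels; only the schedule differs. Neighbourhood operations such as convolution filters additionally need line buffers on an FPGA, which this point-operation sketch leaves out.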

Case Study: Embedded Vision Using FPGA Processor

In our work with a client in the high-speed sorting OEM industry, the available cycle time from the sorting decision to actuation of the pneumatic ejectors was only 3 ms. The original design used microcontrollers that could perform only simple thresholding within this cycle time, which limited the quality of inspection of the end products. We implemented an image processing algorithm on an FPGA processor, exploiting parallelism to perform more complex image processing within the same cycle time. Click here (share case study link) to read the case study in detail.

The next article will elaborate on how FPGAs are well suited to image processing because they can exploit the parallelism inherent in it.
